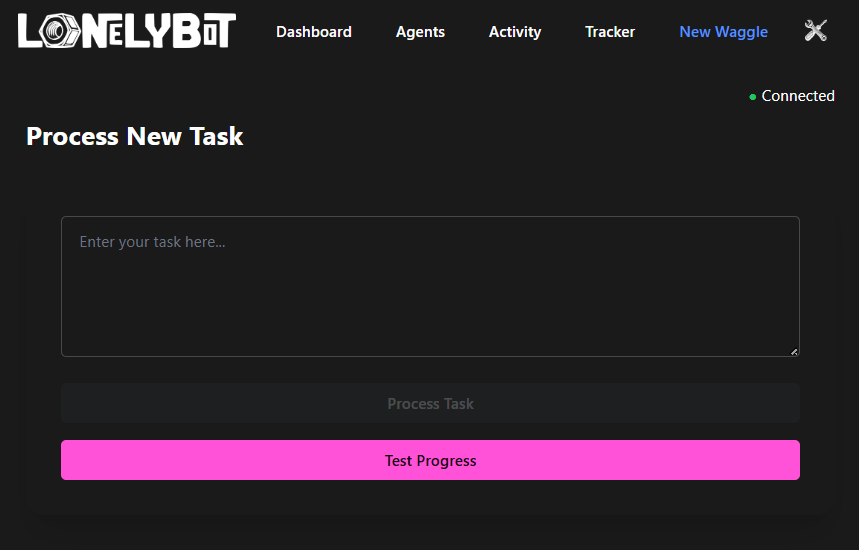

Orchestrate AI Workflows in Plain English

Type what you need. We'll chain the right agents and run it for you—no code, no fuss.

How It Works

Go from request to result in three simple steps.

1. Tell Us Your Task

Just describe what you need—research, write, analyze, automate.

2. We Pick the Agents

Hive selects and chains the right AI agents for your task.

3. Get Instant Results

Watch progress in real time. Download or share with one click.

Core Capabilities

Key features of Hive's AI orchestration platform.

Hive transforms your natural-language requests into modular AI workflows, combining specialized agents with live progress updates and full auditability.

- Natural-Language → Structured Workflow
  - Convert plain-English requests into ordered, dependency-aware task plans automatically.

- Rich Agent Library & Chaining
  - Leverage a plug-and-play suite of AI agents that seamlessly hand off outputs to one another.

- Real-Time Progress
  - Stream live task updates via WebSockets so you always know what's happening.

- Audit & Replay
  - Review every AI prompt, response, and task result to ensure full traceability and replayability.
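
To make the ideas above concrete, here is a minimal Python sketch of a dependency-aware task plan whose agents hand off outputs to one another while every step is recorded for later review. It is an illustration only, not Hive's actual implementation: the `Task` class, the `run_plan` function, and the three stand-in agents are hypothetical names invented for this example, and real agents would call AI models rather than return fixed strings.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable


@dataclass
class Task:
    """One step in a plan (hypothetical structure, not Hive's schema)."""
    name: str
    agent: Callable[[dict], str]  # receives upstream outputs keyed by task name
    depends_on: list = field(default_factory=list)


def run_plan(tasks):
    """Run tasks in dependency order and record an audit entry per task."""
    by_name = {task.name: task for task in tasks}
    # TopologicalSorter expects a mapping of node -> set of predecessors.
    graph = {task.name: set(task.depends_on) for task in tasks}

    results = {}
    audit_log = []
    for name in TopologicalSorter(graph).static_order():
        task = by_name[name]
        # Chaining: each agent only sees the outputs it declared as inputs.
        inputs = {dep: results[dep] for dep in task.depends_on}
        output = task.agent(inputs)
        results[name] = output
        audit_log.append({"task": name, "inputs": inputs, "output": output})
    return results, audit_log


# Stand-in agents (assumptions for illustration only).
def research(inputs):
    return "notes on topic"


def write(inputs):
    return f"draft based on {inputs['research']}"


def review(inputs):
    return f"reviewed: {inputs['write']}"


# The plan is deliberately listed out of order; the sorter fixes the order.
plan = [
    Task("review", review, depends_on=["write"]),
    Task("write", write, depends_on=["research"]),
    Task("research", research),
]

results, audit_log = run_plan(plan)
for entry in audit_log:
    print(entry["task"], "->", entry["output"])
```

Because each audit entry stores the exact inputs and output of a task, the same log could in principle be used to replay a single step or to stream progress to a client as entries are appended.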

Frequently Asked Questions

If your question is not listed, feel free to get in touch here or open an issue on GitHub.

Hive converts your free-form requests into structured, multi-step AI workflows, choreographing specialized agents to handle each task seamlessly.